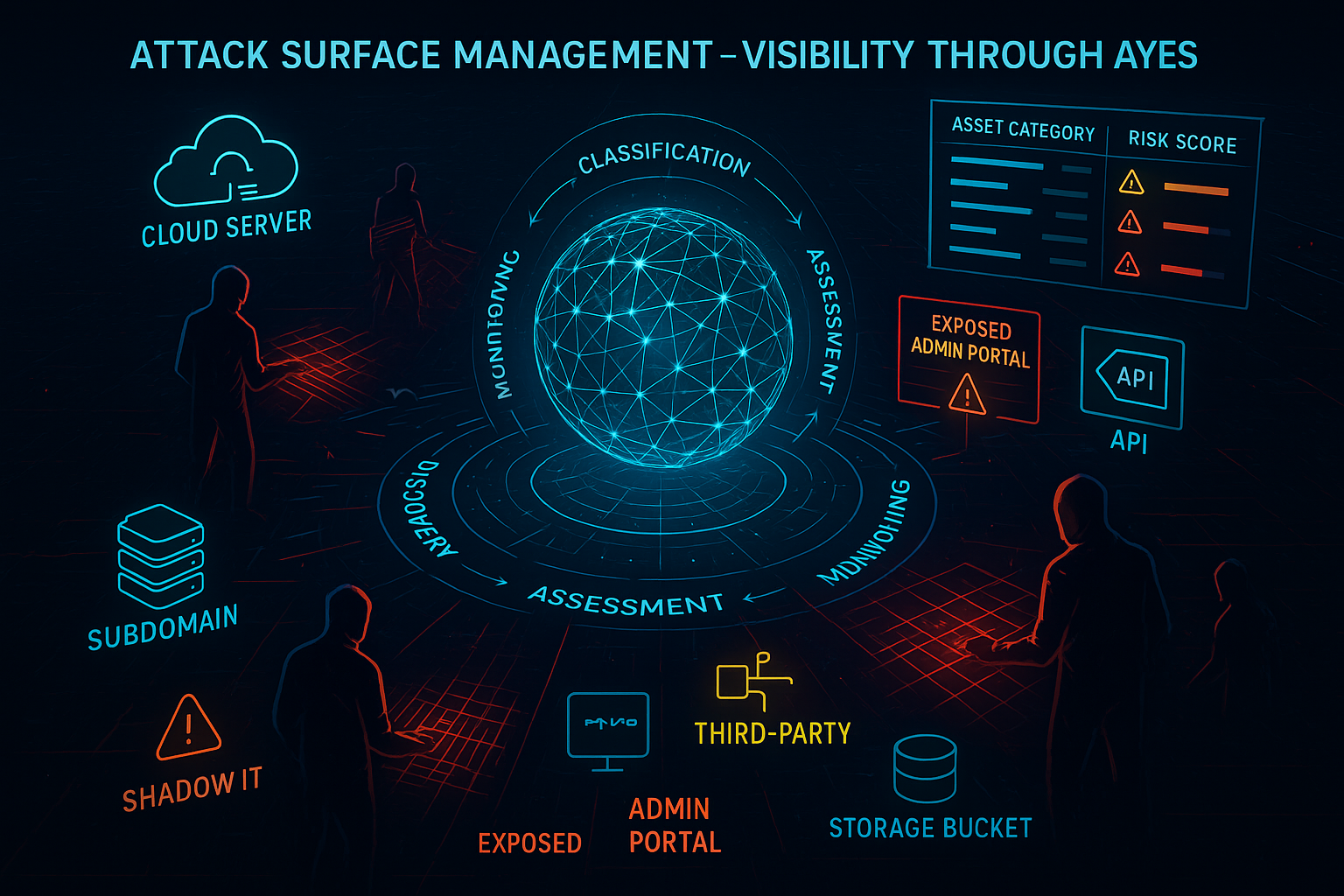

Attack Surface Management: Asset Discovery Methodologies and Techniques

Introduction to Asset Discovery

Asset discovery forms the foundation of any effective Attack Surface Management program. The goal is to identify all internet-facing assets associated with your organization using the same techniques that attackers employ during their reconnaissance phase.

Modern asset discovery combines traditional network reconnaissance with advanced OSINT techniques, certificate transparency monitoring, and cloud-specific enumeration methods. The key is to see your organization’s digital footprint from an external perspective, without relying on internal documentation or assumptions.

Series Overview:

- Part 1: ASM Fundamentals - Core concepts and business case for Attack Surface Management

- Part 2: Asset Discovery Methodologies ← You are here

- Part 3: Asset Classification & Risk Assessment - Risk-based prioritization

- Part 4: Continuous Monitoring & Change Detection - Real-time monitoring and alerts

Passive Reconnaissance Techniques

Passive reconnaissance gathers information about your organization’s assets without directly interacting with target systems, in line with the intelligence-gathering guidance in PTES (Penetration Testing Execution Standard). This approach is stealthy, rarely triggers security alerts, and often reveals more assets than the active scanning methods documented in the OWASP Testing Guide.

DNS Enumeration and Subdomain Discovery

DNS Record Analysis

Every organization’s DNS infrastructure contains valuable intelligence about its digital assets, exposed through record types built on the DNS model defined in RFC 1034. Beyond the obvious A records, examine:

- MX Records: Mail servers and email infrastructure

- NS Records: Name servers and DNS delegation

- TXT Records: Domain verification, SPF, DKIM records

- CNAME Records: Aliases and service integrations

- SRV Records: Service locations and priorities

Subdomain Enumeration Techniques

The art of subdomain discovery lies in understanding how organizations structure their DNS namespaces. Most companies follow predictable patterns - development environments often use prefixes like “dev” or “staging”, while administrative interfaces commonly use terms like “admin” or “mgmt”. This predictability becomes a security weakness when these systems are inadvertently exposed to the internet.

Dictionary-Based Discovery

Run common subdomain wordlists, such as those from SecLists and FuzzDB, against your target domain:

# Using common subdomains

for sub in www api admin dev test staging mail ftp; do

    dig +short "${sub}.example.com"

done

This straightforward approach works because most organizations follow common naming conventions. However, its effectiveness depends entirely on the quality of your wordlist. I recommend maintaining industry-specific wordlists, starting from collections such as Assetnote Wordlists, that include terms relevant to your target’s business sector.
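
The same idea can be driven from a wordlist file instead of an inline list. The sketch below is illustrative only: the function names (gen_candidates, has_wildcard, resolve_candidates) and the wordlist.txt path are assumptions, not part of any tool. The wildcard check is included because a domain with a wildcard record answers for every label, which would otherwise make every candidate look live.

```shell
# Build candidate hostnames from a wordlist on stdin.
# Trims whitespace, skips blank lines and '#' comments, lowercases labels.
gen_candidates() {
    awk -v domain="$1" '
        { sub(/\r$/, ""); gsub(/^[ \t]+|[ \t]+$/, "") }
        $0 == "" || /^#/ { next }
        { print tolower($0) "." domain }
    '
}

# Succeeds if a random, almost certainly nonexistent label still resolves,
# which suggests a wildcard DNS record.
has_wildcard() {
    probe="wildcard-check-$$-$(date +%s).$1"
    [ -n "$(dig +short "$probe")" ]
}

# Read hostnames on stdin and print only those that resolve.
resolve_candidates() {
    while IFS= read -r host; do
        answer=$(dig +short "$host" | tr '\n' ' ')
        if [ -n "$answer" ]; then
            printf '%s %s\n' "$host" "$answer"
        fi
    done
}

# Example (hypothetical wordlist path):
#   has_wildcard example.com || gen_candidates example.com < wordlist.txt | resolve_candidates
```

Keeping candidate generation separate from resolution makes it easy to swap in a faster resolver later without touching the wordlist handling.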

Pattern-Based Discovery

Many organizations number their hosts predictably, so iterate over likely prefixes and indexes:

# Pattern-based discovery

for i in {1..10}; do

    dig +short "server${i}.example.com"

    dig +short "app${i}.example.com"

done

Pattern-based enumeration exploits the systematic approach most IT teams use for naming infrastructure. Web applications often scale horizontally with numbered instances (app1, app2, etc.), and server farms typically follow similar patterns. This technique has revealed entire server clusters that organizations forgot they exposed.
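
Numbered hosts are often zero-padded (server01 rather than server1), so it can help to generate both forms. This is a small sketch under that assumption; gen_numbered is a hypothetical helper name.

```shell
# Print <prefix><n>.<domain> for n in [first, last], in both plain and
# two-digit zero-padded form, de-duplicated.
gen_numbered() {
    prefix=$1
    first=$2
    last=$3
    domain=$4
    i=$first
    while [ "$i" -le "$last" ]; do
        printf '%s%d.%s\n' "$prefix" "$i" "$domain"
        printf '%s%02d.%s\n' "$prefix" "$i" "$domain"
        i=$((i + 1))
    done | LC_ALL=C sort -u
}

# Example:
#   gen_numbered app 1 20 example.com | while IFS= read -r h; do dig +short "$h"; done
```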

DNS Zone Transfers

While rare, misconfigured DNS servers might allow zone transfers:

# Attempt zone transfer

dig @ns1.example.com example.com AXFR

Zone transfers represent one of the most critical DNS misconfigurations. When successful, they provide a complete map of an organization’s DNS infrastructure - essentially handing over the architectural blueprint of their internet-facing assets. While modern DNS servers typically restrict zone transfers, misconfigured secondary servers or internal DNS systems sometimes remain vulnerable.
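
Since secondary servers are the usual culprits, it is worth trying every authoritative name server rather than only ns1. The following is a rough sketch; the function names are illustrative, and the success check simply assumes that a completed transfer contains at least one SOA record in dig's answer output.

```shell
# Reads dig output on stdin; succeeds if it looks like a completed transfer.
axfr_succeeded() {
    awk '
        /Transfer failed/ { failed = 1 }
        $3 == "IN" && $4 == "SOA" { soa++ }
        END { exit !(soa >= 1 && !failed) }
    '
}

# Attempt AXFR against each name server listed for the domain.
try_zone_transfers() {
    domain=$1
    for ns in $(dig +short NS "$domain"); do
        if dig +noall +answer "@${ns}" "$domain" AXFR | axfr_succeeded; then
            echo "[!] ${ns} allows zone transfer for ${domain}"
        else
            echo "[-] ${ns} refused zone transfer"
        fi
    done
}

# Example:
#   try_zone_transfers example.com
```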

Certificate Transparency Log Analysis

Certificate Transparency logs have revolutionized external asset discovery. Introduced to combat certificate fraud, these public logs inadvertently became a goldmine for reconnaissance. Major browsers such as Chrome and Safari require publicly trusted SSL/TLS certificates to be logged, so in practice certificates issued by a trusted Certificate Authority end up in an append-only public record of an organization’s digital footprint.

Certificate Transparency (CT) logs, accessed through services like crt.sh and Censys, provide a public record of the SSL/TLS certificates issued for your domains. This record often reveals:

- Forgotten subdomains (tools such as Subfinder draw on CT sources for this)

- Development environments

- Internal applications accidentally exposed

- Third-party services using your domain

The beauty of CT logs lies in their comprehensiveness. Unlike traditional discovery methods that might miss non-standard ports or services, CT logs capture every publicly trusted certificate, regardless of the underlying service configuration. Certificates issued by private or internal CAs are not logged, so CT complements other techniques rather than replacing them. This has proven invaluable for discovering internal applications that developers accidentally exposed during testing.

CT Log Monitoring

# Query crt.sh for all certificates (%25 is the URL-encoded % wildcard)

curl -s "https://crt.sh/?q=%25.example.com&output=json" |

jq -r '.[].name_value' |

sed 's/\*\.//g' |

sort -u

The crt.sh interface provides an accessible way to query CT logs, but remember that multiple log operators exist. For comprehensive coverage, consider querying multiple CT log sources including Google’s Certificate Transparency, Comodo CT Search, and Facebook’s Certificate Transparency Monitor. Tools like Certstream provide real-time certificate monitoring.
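
Raw CT results tend to contain wildcard entries, mixed case, and names from unrelated domains that happen to share a certificate. A small normalization step, sketched here with a hypothetical normalize_ct_names helper, keeps only names that are the domain itself or a true subdomain of it.

```shell
# Read hostnames on stdin, one per line; print unique, lowercased names that
# equal the domain or end in ".<domain>". A leading "*." is stripped first.
normalize_ct_names() {
    domain=$1
    tr 'A-Z' 'a-z' | tr -d '\r' |
        sed 's/^\*\.//' |
        awk -v d="$domain" '
            $0 == d { print; next }
            length($0) > length(d) + 1 &&
                substr($0, length($0) - length(d)) == "." d { print }
        ' | LC_ALL=C sort -u
}

# Example:
#   curl -s "https://crt.sh/?q=%25.example.com&output=json" |
#       jq -r '.[].name_value' | normalize_ct_names example.com
```

The suffix comparison is deliberate: a plain grep for example.com would also keep names such as example.com.evil.net or notexample.com.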

Advanced CT Analysis

Look for patterns in certificate data:

- Wildcard certificates (*.example.com)

- Development environments (dev.example.com)

- Service-specific certificates (api.example.com)

- Expired certificates (might indicate abandoned services)

Pattern analysis in CT data reveals organizational behavior and security practices. Wildcard certificates, while convenient for administrators, often indicate broader attack surfaces. Development-specific certificates frequently expose pre-production systems that lack proper security controls. Most concerning are expired certificates for active services - these indicate potential SSL/TLS vulnerabilities or automated certificate management failures.

Open Source Intelligence (OSINT) Gathering

OSINT gathering represents perhaps the most underutilized aspect of asset discovery among security teams. The same information that helps organizations market their services and attract talent also provides attackers with detailed intelligence about infrastructure, technology choices, and security practices.

Search Engine Reconnaissance

Search engines index more than just web pages. Google’s crawlers also pick up exposed configuration files, error pages, API documentation, and administrative interfaces, which makes them sophisticated reconnaissance tools. The key is understanding how to construct queries that reveal security-relevant information while filtering out noise.

Google Dorking Examples:

site:example.com filetype:pdf

site:example.com inurl:admin

site:example.com "index of"

site:example.com intitle:"login"

These Google dorks are basic patterns of the kind cataloged in the GHDB (Google Hacking Database), but effective OSINT also requires understanding your target’s business context; the OSINT Framework is a useful index of sources for that. Financial organizations might expose regulatory filings containing infrastructure details, while technology companies often reveal API documentation or internal tools through careless indexing.

Shodan and Censys Searches

These specialized search engines continuously scan the public internet for exposed services and devices:

# Shodan search examples

org:"Your Organization Name"

ssl:"example.com"

port:443 "example.com"

Shodan and Censys provide capabilities that traditional search engines cannot match. They actively probe internet-facing services, cataloging everything from web servers to industrial control systems. For asset discovery, these platforms excel at revealing services that organizations might not realize they’re exposing - database servers, administrative panels, and IoT devices that bypass traditional security perimeters.

Social Media and Public Repositories

- GitHub: Configuration files, API endpoints, infrastructure details

- LinkedIn: Technology stack information from job postings

- Twitter: Service announcements and infrastructure details

- Stack Overflow: Technical discussions revealing system details

Social media reconnaissance often provides the most current intelligence about an organization’s infrastructure. GitHub repositories frequently contain configuration files with hard-coded endpoints, while LinkedIn job postings reveal technology stack details that help focus discovery efforts. Developer discussions on Stack Overflow can expose specific software versions and configuration challenges.

Third-Party Service Discovery

Modern organizations rarely operate in isolation. Cloud services, content delivery networks, and third-party integrations create complex webs of interconnected infrastructure. Understanding these relationships is crucial for comprehensive asset discovery.

CDN and Cloud Service Enumeration

Many organizations use CDNs and cloud services that may not be immediately obvious. Content Delivery Networks complicate asset discovery by abstracting the underlying infrastructure. However, they also create new attack vectors and potential points of failure. Identifying CDN usage helps you understand an organization’s performance priorities and the potential geographic distribution of its assets.

Cloudflare Detection:

# Check whether the domain resolves into Cloudflare's 104.16.0.0/13 or 104.24.0.0/14 ranges

# (Cloudflare publishes further ranges; this prefix match covers only these two)

dig +short example.com | grep -E '^104\.(1[6-9]|2[0-7])\.'

Cloudflare detection reveals more than just CDN usage: it indicates an organization’s approach to DDoS protection and web application security. Organizations behind Cloudflare often have additional security measures in place, but they may also have configuration weaknesses that bypass protection.
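
A grep on address prefixes can only approximate CIDR membership. A more general check compares addresses against a list of CIDR blocks, which Cloudflare publishes at https://www.cloudflare.com/ips-v4. The helpers below are a sketch with hypothetical names, and they handle IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds if IPv4 address $1 falls inside CIDR block $2 (e.g. 104.16.0.0/13).
ip_in_cidr() {
    network=${2%/*}
    bits=${2#*/}
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$network") & mask )) ]
}

# Reads CIDR blocks (one per line) on stdin; succeeds if $1 is in any of them.
ip_in_any_cidr() {
    while IFS= read -r cidr || [ -n "$cidr" ]; do
        [ -n "$cidr" ] || continue
        if ip_in_cidr "$1" "$cidr"; then
            return 0
        fi
    done
    return 1
}

# Example (cf_ranges.txt is a hypothetical local copy of the published list):
#   curl -s https://www.cloudflare.com/ips-v4 > cf_ranges.txt
#   for ip in $(dig +short example.com | grep -E '^[0-9.]+$'); do
#       ip_in_any_cidr "$ip" < cf_ranges.txt && echo "$ip is in a Cloudflare range"
#   done
```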

AWS S3 Bucket Discovery:

# Common S3 bucket naming patterns

curl -s https://companyname.s3.amazonaws.com/

curl -s https://companyname-backups.s3.amazonaws.com/

curl -s https://companyname-logs.s3.amazonaws.com/

S3 bucket discovery has evolved into a critical security practice because exposed buckets containing sensitive data are so common, a risk that the AWS Security Best Practices guidance addresses directly. Organizations often use predictable naming conventions, which makes bucket enumeration straightforward with tools like S3Scanner and CloudBrute. The risk extends beyond data exposure: misconfigured S3 buckets can provide insight into an organization’s AWS infrastructure and deployment practices.
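
The HTTP status code alone usually tells you whether a guessed bucket exists. The sketch below encodes the typical responses as I understand them (200 for a listable bucket, 403 for an existing but private one, 404 for a missing one); the suffix list and function names are illustrative assumptions.

```shell
# Print candidate bucket names for a base name using common suffixes.
bucket_candidates() {
    for suffix in "" -backups -backup -logs -assets -static -dev -staging -prod; do
        printf '%s%s\n' "$1" "$suffix"
    done
}

# Map an HTTP status code from the bucket endpoint to a rough verdict.
classify_s3_status() {
    case $1 in
        200)     echo "exists: publicly listable" ;;
        403)     echo "exists: access denied" ;;
        301|307) echo "exists: redirected to another region" ;;
        404)     echo "not found" ;;
        *)       echo "unknown ($1)" ;;
    esac
}

# Probe one bucket name and print "<name><TAB><verdict>".
check_bucket() {
    code=$(curl -s -o /dev/null -w '%{http_code}' "https://$1.s3.amazonaws.com/")
    printf '%s\t%s\n' "$1" "$(classify_s3_status "$code")"
}

# Example:
#   bucket_candidates companyname | while IFS= read -r b; do check_bucket "$b"; sleep 1; done
```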

Office 365 and Google Workspace:

- Check MX records for hosted email

- Look for federation endpoints

- Examine autodiscover records

Email service discovery reveals authentication infrastructure and potential attack vectors. Organizations using federated authentication often expose additional services and integration points that might not be immediately obvious from web application discovery alone.
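
The MX check in the list above can be scripted by matching mail exchanger hostnames against well-known provider suffixes. This is a minimal sketch: classify_mx and mail_provider are hypothetical names, and the suffix list covers only the two providers discussed here.

```shell
# Classify a single MX hostname by provider suffix.
classify_mx() {
    # Lowercase and drop the trailing root dot before matching.
    host=$(printf '%s' "$1" | tr 'A-Z' 'a-z' | sed 's/\.$//')
    case $host in
        *.mail.protection.outlook.com) echo "Microsoft 365" ;;
        *.google.com|*.googlemail.com) echo "Google Workspace" ;;
        *)                             echo "other" ;;
    esac
}

# Look up MX records for a domain and label each target.
mail_provider() {
    dig +short MX "$1" | while read -r _priority target; do
        printf '%s\t%s\n' "$target" "$(classify_mx "$target")"
    done
}

# Example:
#   mail_provider example.com
#   dig +short CNAME autodiscover.example.com   # autodiscover record check
```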

Active Reconnaissance Techniques

Active reconnaissance involves directly interacting with discovered assets to gather additional information. This approach provides more detailed intelligence but may trigger security alerts.

While passive reconnaissance provides the foundation for asset discovery, active techniques are necessary to understand the actual security posture and functionality of discovered assets. The key is balancing information gathering with operational security - you want to learn as much as possible without triggering defensive measures that might alert security teams or block your reconnaissance activities.

Port Scanning and Service Enumeration

Port scanning remains a fundamental technique for understanding what services an organization exposes. However, modern network defenses have evolved significantly, requiring more nuanced approaches than simple port sweeps.

Strategic Port Scanning

Focus on common web and application ports:

# Web services

nmap -sS -p 80,443,8080,8443,3000,5000,8000 example.com

# Common services

nmap -sS -p 21,22,23,25,53,80,110,143,443,993,995 example.com

# Extended scan for discovered hosts

nmap -sS -sV -T4 -p- -iL discovered_hosts.txt

Effective port scanning requires understanding both the technical and business context of your target, as the PTES Technical Guidelines describe. Web applications typically expose standard HTTP/HTTPS ports, but modern microservices architectures often use non-standard ports for different services. The timing template matters: -T4 (aggressive) favors speed and is more likely to trigger intrusion detection systems, while slower templates such as -T2 are quieter but may never finish a full-range scan of a large estate.
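
Scan results are easier to feed into later stages when reduced to host:port pairs. The following sketch parses Nmap's grepable output (-oG); open_ports_from_gnmap is a hypothetical helper name.

```shell
# Read Nmap grepable output on stdin; print "<ip>:<port> <service>" for each open port.
open_ports_from_gnmap() {
    awk -F'\t' '
        /Ports: / {
            split($1, h, " ")                       # "Host: <ip> (<name>)"
            for (k = 2; k <= NF; k++) {
                if ($k !~ /^Ports: /) continue
                n = split(substr($k, 8), entries, ", ")
                for (i = 1; i <= n; i++) {
                    # port/state/protocol/owner/service/...
                    split(entries[i], f, "/")
                    if (f[2] == "open") print h[2] ":" f[1] " " f[5]
                }
            }
        }
    '
}

# Example ("-oG -" writes grepable output to stdout):
#   nmap -sS -p 80,443,8080,8443 -oG - example.com | open_ports_from_gnmap
```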

Service Version Detection

Identify specific software versions and configurations:

# Service fingerprinting

nmap -sV -sC -p 80,443 example.com

# HTTP methods and headers

curl -s -i -X OPTIONS https://example.com

Service version detection provides crucial intelligence for vulnerability assessment. Specific software versions map to known vulnerabilities in the CVE database and the NIST National Vulnerability Database (NVD), and understanding the exact implementation helps focus subsequent security testing as described in the OWASP Testing Guide. The -sC flag enables the default scripts from the Nmap Scripting Engine, which can reveal additional configuration details, but use it cautiously as these scripts are more likely to trigger security alerts.

Web Application Discovery

Once you’ve identified web servers, the next phase involves understanding the application structure and functionality. Modern web applications are complex ecosystems with multiple entry points, APIs, and administrative interfaces.

Directory and File Enumeration

Brute-force likely paths to discover content that is not linked from the site:

# Directory brute force

gobuster dir -u https://example.com -w /usr/share/wordlists/common.txt

# File extension discovery

gobuster dir -u https://example.com -w /usr/share/wordlists/common.txt -x php,asp,aspx,jsp

Directory enumeration success depends heavily on wordlist quality (the lists shipped with DirBuster and similar tools are a common starting point) and on understanding the target’s technology stack, as the OWASP Web Security Testing Guide explains. Applications built with different frameworks expose different types of files and directories, a topic covered in depth in The Web Application Hacker’s Handbook. PHP applications might expose .php files in predictable locations (see the PHP Security Guide), while .NET applications use different patterns (see the Microsoft Security Development Lifecycle guidance). The key is adapting your approach based on the technology stack revealed during initial reconnaissance, for example with Wappalyzer.

API Endpoint Discovery

Modern applications extensively use APIs:

# Common API patterns

curl -s https://example.com/api/v1/

curl -s https://example.com/rest/

curl -s https://example.com/graphql/

API discovery has become increasingly important as organizations adopt microservices architectures. APIs often lack the same access controls as web interfaces, and they frequently expose more functionality than intended. GraphQL endpoints are particularly interesting because they often provide schema introspection capabilities that reveal the entire API structure.
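
Schema introspection can be tested with a single POST. The sketch below sends a small introspection query and checks whether the response contains a __schema object; the function names are illustrative, and only endpoints you are authorized to test should be probed.

```shell
# Minimal GraphQL introspection query as a JSON request body.
graphql_introspection_payload() {
    printf '%s' '{"query":"{ __schema { queryType { name } types { name kind } } }"}'
}

# POST the introspection query to a GraphQL endpoint and print the response.
probe_graphql() {
    curl -s -X POST -H 'Content-Type: application/json' \
        --data "$(graphql_introspection_payload)" "$1"
}

# Reads a GraphQL response on stdin; succeeds if it carries a __schema object.
introspection_enabled() {
    grep -q '"__schema"[[:space:]]*:'
}

# Example:
#   probe_graphql https://example.com/graphql/ | introspection_enabled &&
#       echo "introspection appears to be enabled"
```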

Technology Stack Identification

Understand the underlying technology:

- HTTP Headers: Server, X-Powered-By, X-Frame-Options

- Response Patterns: Error messages, default pages

- File Extensions: .php, .asp, .jsp reveal technology

- URL Structures: Framework-specific patterns

Technology stack identification, aided by tools like Wappalyzer and BuiltWith, guides subsequent reconnaissance efforts. Each technology has characteristic weaknesses (cataloged in MITRE CWE), configuration files, and administrative interfaces. Understanding whether an application uses WordPress, Django, or custom development helps focus discovery efforts on the most likely attack vectors.
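
The header-based signals in the list above are easy to pull out of a response. This is a small sketch; fingerprint_headers is a hypothetical helper, and the set of headers it keeps is a starting point rather than a complete list.

```shell
# Read raw HTTP response headers on stdin; print technology-revealing ones
# as "<lowercased-name>=<value>". Header names are matched case-insensitively.
fingerprint_headers() {
    tr -d '\r' | awk -F': *' '
        tolower($1) == "server" || tolower($1) == "x-powered-by" ||
        tolower($1) == "x-aspnet-version" || tolower($1) == "x-generator" {
            name = tolower($1)
            sub(/^[^:]*: */, "")
            print name "=" $0
        }
    '
}

# Example:
#   curl -s -I https://example.com | fingerprint_headers
```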

SSL/TLS Certificate Analysis

SSL/TLS certificates provide a wealth of information beyond just enabling encrypted communications. Certificate details reveal organizational structure, infrastructure relationships, and security practices.

Certificate Information Extraction

Pull the certificate a server presents and inspect its fields:

# Certificate details

echo | openssl s_client -connect example.com:443 2>/dev/null | \

openssl x509 -noout -text

# Subject Alternative Names

echo | openssl s_client -connect example.com:443 2>/dev/null | \

openssl x509 -noout -text | grep -A1 "Subject Alternative Name"

Certificate analysis often reveals more assets than any other single technique. Subject Alternative Names (SANs) frequently contain internal hostnames, development environments, and related services that aren’t discoverable through traditional DNS enumeration. Organizations often use wildcard certificates or multi-domain certificates that expose their entire infrastructure naming conventions.
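
To turn the SAN line into a clean host list, the text output can be split on commas and filtered for DNS entries. A minimal sketch, with san_names as a hypothetical helper name:

```shell
# Read "openssl x509 -noout -text" output on stdin; print unique DNS names
# from the Subject Alternative Name extension, one per line.
san_names() {
    awk '/Subject Alternative Name/ { getline; print }' |
        tr ',' '\n' |
        sed -n 's/^[[:space:]]*DNS://p' |
        tr 'A-Z' 'a-z' | LC_ALL=C sort -u
}

# Example:
#   echo | openssl s_client -connect example.com:443 2>/dev/null |
#       openssl x509 -noout -text | san_names
```

Non-DNS entries such as IP addresses are dropped here; keep them if they are useful for your inventory.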

Certificate Chain Analysis

- Issuing Certificate Authority

- Intermediate certificates

- Root certificate trust path

- Certificate transparency compliance

Certificate chain analysis reveals organizational security practices and potential trust relationships. Organizations that use internal Certificate Authorities might expose additional infrastructure, while those using specific commercial CAs might indicate security budgets and risk tolerances.

Advanced Discovery Techniques

Modern infrastructure complexity requires sophisticated discovery approaches that go beyond traditional network reconnaissance. Cloud services, containerization, and microservices architectures create new attack surfaces that require specialized techniques.

Cloud-Specific Enumeration

Cloud platforms have fundamentally changed how organizations deploy and manage infrastructure. Each major cloud provider has unique services and configuration patterns that require specialized discovery approaches.

Amazon Web Services (AWS)

# S3 bucket enumeration

aws s3 ls s3://company-name/ --no-sign-request

aws s3 ls s3://company-backups/ --no-sign-request

# CloudFront distributions

dig +short d1234567890.cloudfront.net

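# EC2 instance metadata (link-local; reachable only from the instance itself or through an SSRF flaw)

curl -s http://169.254.169.254/latest/meta-data/

AWS discovery focuses on identifying misconfigured services that might expose sensitive data or provide unauthorized access. S3 buckets represent the most common cloud misconfiguration, but organizations also expose databases, message queues, and compute instances through improper access controls. The EC2 metadata service is particularly valuable if accessible, as it provides detailed information about the instance’s configuration and permissions. Because 169.254.169.254 is a link-local address, it cannot be queried from the internet directly; exposure typically arises when a server-side request forgery (SSRF) flaw lets an attacker relay requests through the instance.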

Microsoft Azure

# Azure blob storage

curl -s https://companyname.blob.core.windows.net/

curl -s https://companyname.file.core.windows.net/

# Azure AD discovery

curl -s https://login.microsoftonline.com/companyname.com/.well-known/openid-configuration

Azure discovery often reveals identity infrastructure and business applications. Azure Active Directory configurations frequently expose tenant information and authentication endpoints that provide insight into an organization’s identity architecture. Blob storage discovery follows similar patterns to AWS S3, but Azure’s integration with Office 365 creates additional exposure points.
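
The tenant information mentioned above can be read from the OpenID Connect discovery document, whose token endpoint URL embeds the tenant GUID. The sketch below extracts it without a JSON parser; extract_tenant_id is a hypothetical helper, and it assumes the public login.microsoftonline.com host.

```shell
# Read an OpenID configuration document on stdin; print the tenant GUID found
# in an endpoint URL, or nothing if no such URL is present.
extract_tenant_id() {
    grep -Eo 'login\.microsoftonline\.com/[0-9a-fA-F-]{36}/oauth2' |
        head -n 1 | cut -d/ -f2
}

# Example:
#   curl -s https://login.microsoftonline.com/example.com/.well-known/openid-configuration |
#       extract_tenant_id
```

Matching on the endpoint URL rather than on any GUID avoids picking up the trace and correlation IDs that error responses contain.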

Google Cloud Platform

# GCP storage buckets

curl -s https://storage.googleapis.com/companyname/

curl -s https://companyname.storage.googleapis.com/

# App Engine applications

curl -s https://companyname.appspot.com/

GCP discovery benefits from Google’s consistent naming conventions and service integration. App Engine applications often use predictable subdomain patterns, while Cloud Storage buckets follow similar enumeration patterns to other cloud providers. GCP’s emphasis on containerization means that identifying container registries and Kubernetes clusters becomes particularly important.

Container and Microservices Discovery

Containerization has transformed application deployment, creating new discovery challenges and opportunities. Container registries, orchestration platforms, and service meshes represent new attack surfaces that require specialized reconnaissance techniques.

Docker Registry Enumeration

# Public Docker registries (list public repositories in a namespace)

curl -s https://hub.docker.com/v2/repositories/companyname/

curl -s "https://quay.io/api/v1/repository?namespace=companyname&public=true"

# Private registries

curl -s https://registry.example.com/v2/_catalog

Container registry discovery reveals development practices, internal applications, and infrastructure details. Organizations often use predictable naming conventions for container images, and registry APIs frequently expose more information than intended. Private registries are particularly valuable because they often contain internal applications and configuration details that aren’t available elsewhere.

Kubernetes API Discovery

# Kubernetes API endpoints

curl -s https://kubernetes.example.com/api/v1/

curl -s https://k8s.example.com/api/v1/namespaces/

Kubernetes discovery focuses on identifying exposed API endpoints and dashboard interfaces. Misconfigured Kubernetes clusters often expose significant functionality to unauthenticated users, providing detailed information about running applications and infrastructure configuration. The API server discovery is particularly important because it can reveal the entire cluster architecture.

Mobile Application Asset Discovery

Mobile applications create unique discovery challenges because they often expose backend services and APIs that aren’t accessible through traditional web reconnaissance.

Mobile App Analysis

- iOS App Store: Search for organization’s applications

- Google Play Store: Identify Android applications

- APK Analysis: Extract API endpoints and server URLs

- iOS IPA Analysis: Discover backend services

Mobile application discovery requires understanding how applications interact with backend services. Application stores provide obvious starting points, but static analysis of application packages often reveals hardcoded URLs, API endpoints, and service configurations that provide additional attack surface intelligence.
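
A quick first pass at static analysis is to pull printable strings out of the package and keep anything that looks like a URL. This sketch is deliberately crude (dedicated decompilers recover far more); extract_hosts is a hypothetical helper and app.apk a placeholder file name.

```shell
# Read text on stdin; print the unique, lowercased hostnames of any
# http:// or https:// URLs it contains.
extract_hosts() {
    grep -Eo 'https?://[A-Za-z0-9.-]+' |
        sed -E 's#^https?://##' |
        tr 'A-Z' 'a-z' | LC_ALL=C sort -u
}

# Example (APK files are ZIP archives; classes*.dex holds the compiled code):
#   unzip -p app.apk 'classes*.dex' | strings | extract_hosts | grep 'example\.com$'
```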

Backend Service Discovery

Mobile applications often reveal backend APIs:

# Common mobile API patterns

curl -s https://api.example.com/mobile/v1/

curl -s https://mobile-api.example.com/v1/

curl -s https://app.example.com/api/

Mobile backend discovery focuses on identifying APIs that support mobile applications. These APIs often have different security controls than web applications and may expose functionality that isn’t available through other interfaces. The versioning in API URLs (v1, v2) also provides insight into application evolution and potential legacy endpoints.

Automated Discovery Tools

Manual asset discovery techniques provide deep understanding and flexibility, but they don’t scale to the requirements of modern enterprise environments. Organizations with extensive digital footprints need automated solutions that can continuously discover assets, track changes, and integrate with existing security workflows.

Commercial Solutions

Commercial Attack Surface Management platforms have matured significantly, offering comprehensive solutions that combine multiple discovery techniques with advanced analytics and threat intelligence integration.

Specialized ASM Platforms

- Rapid7 InsightASM: Comprehensive external asset discovery

- Censys ASM: Internet-wide asset monitoring

- RiskIQ Digital Footprint: Brand and infrastructure monitoring

- Shodan Enterprise: Global device and service discovery

- Echelon: Cybersecurity check-up solution

These platforms excel at providing continuous, comprehensive coverage that manual techniques cannot match. They combine passive reconnaissance with active scanning, integrate threat intelligence feeds, and provide risk prioritization based on real-world threat data. However, they require significant investment and may not provide the granular control that security teams need for specialized reconnaissance activities.

Cloud Security Platforms

- Wiz: Cloud asset discovery and security

- Prisma Cloud: Multi-cloud asset visibility

- Lacework: Cloud infrastructure monitoring

Cloud security platforms focus specifically on cloud asset discovery and security posture management. They provide deeper integration with cloud APIs and service-specific reconnaissance capabilities that general-purpose ASM platforms might miss. The advantage is comprehensive cloud coverage; the limitation is reduced visibility into on-premises or hybrid infrastructure.

Open Source Tools

Open source tools provide flexibility and cost-effectiveness for organizations that need customizable discovery capabilities. They also offer transparency into discovery methodologies and can be adapted for specific organizational requirements.

DNS Enumeration

- Amass: Comprehensive subdomain enumeration

- Subfinder: Fast subdomain discovery

- Assetfinder: Simple asset discovery

- Sublist3r: Multi-source subdomain enumeration

These tools represent different approaches to DNS enumeration, each with strengths and limitations. Amass provides the most comprehensive coverage but requires significant time and resources. Subfinder focuses on speed and efficiency, making it ideal for continuous monitoring. The key is understanding which tool fits your specific use case and resource constraints.

Web Discovery

- Httpx: HTTP/HTTPS probe utility

- Nuclei: Vulnerability scanner with discovery templates

- Aquatone: Web application discovery

- Gobuster: Directory and file brute forcing

Web discovery tools have evolved to handle modern web applications with complex architectures. Httpx provides efficient HTTP probing capabilities, while Nuclei combines discovery with vulnerability detection. The integration capabilities of these tools allow for sophisticated discovery pipelines that can adapt to different organizational requirements.

Port Scanning

- Nmap: Network discovery and security auditing

- Masscan: High-speed port scanner

- RustScan: Modern port scanner

Port scanning tool selection depends on your specific requirements for speed, stealth, and feature completeness. Nmap provides comprehensive capabilities with extensive scripting support, while Masscan offers high-speed scanning for large-scale discovery. RustScan represents the new generation of tools that prioritize performance and modern development practices.

Discovery Automation and Orchestration

Effective asset discovery requires more than just running tools - it requires orchestrating multiple techniques, managing data flows, and integrating with existing security operations. Modern discovery automation focuses on creating repeatable, scalable processes that can adapt to changing infrastructure.

Automated Discovery Pipelines

Building effective discovery pipelines requires understanding the relationships between different discovery techniques and how they complement each other. The most effective approaches combine passive techniques for comprehensive coverage with active techniques for detailed analysis.

Continuous Discovery Workflow

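#!/bin/bash

# ASM Discovery Pipeline

# Usage: discovery_pipeline.sh <domain>   (nmap -sS requires root privileges)

DOMAIN=${1:?usage: $0 <domain>}

DATE=$(date +%Y%m%d)

OUTPUT_DIR="./discovery_${DATE}"

mkdir -p "${OUTPUT_DIR}"

echo "[+] Starting ASM discovery for ${DOMAIN}"

# Phase 1: Passive discovery

echo "[+] Certificate transparency logs"

curl -s "https://crt.sh/?q=%25.${DOMAIN}&output=json" |

    jq -r '.[].name_value' | sed 's/^\*\.//' | sort -u > "${OUTPUT_DIR}/ct_logs.txt"

echo "[+] Subdomain enumeration"

subfinder -d "${DOMAIN}" -o "${OUTPUT_DIR}/subdomains.txt"

amass enum -passive -d "${DOMAIN}" -o "${OUTPUT_DIR}/amass.txt"

# Phase 2: Active discovery

echo "[+] Live host detection"

# Name the passive result files explicitly so reruns do not feed earlier output back in

cat "${OUTPUT_DIR}/ct_logs.txt" "${OUTPUT_DIR}/subdomains.txt" "${OUTPUT_DIR}/amass.txt" |

    sort -u | httpx -silent -o "${OUTPUT_DIR}/live_hosts.txt"

echo "[+] Service enumeration"

# httpx writes URLs; nmap expects bare hostnames, so strip the scheme, port, and path

sed -E 's#^https?://##; s#[:/].*$##' "${OUTPUT_DIR}/live_hosts.txt" | sort -u > "${OUTPUT_DIR}/nmap_targets.txt"

nmap -sS -sV -T4 -p 80,443,8080,8443 -iL "${OUTPUT_DIR}/nmap_targets.txt" -oN "${OUTPUT_DIR}/nmap_scan.txt"

echo "[+] Discovery complete. Results in ${OUTPUT_DIR}/"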

This pipeline demonstrates the progression from passive to active discovery techniques. The passive phase gathers comprehensive asset lists without triggering security alerts, while the active phase focuses on understanding the services and applications running on discovered assets. The key is ensuring that each phase informs the next, creating a focused and efficient discovery process.

Scheduled Discovery

# Crontab entry for daily discovery

0 2 * * * /opt/asm/discovery_pipeline.sh example.com

Scheduled discovery ensures that asset intelligence remains current in dynamic environments. The frequency depends on how quickly your organization’s infrastructure changes and the criticality of maintaining current asset intelligence. Daily discovery works for most organizations, but high-change environments might require more frequent updates.
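
Daily runs become useful once each run is compared with the previous one. A minimal sketch of that comparison, assuming each run leaves a list of hosts in a text file; new_assets is a hypothetical helper and the directory names in the example are placeholders.

```shell
# Print lines that appear in the new file ($2) but not in the old file ($1).
new_assets() {
    old_sorted=$(mktemp)
    new_sorted=$(mktemp)
    LC_ALL=C sort -u "$1" > "$old_sorted"
    LC_ALL=C sort -u "$2" > "$new_sorted"
    # comm -13 suppresses lines unique to the first file and lines common to both.
    LC_ALL=C comm -13 "$old_sorted" "$new_sorted"
    rm -f "$old_sorted" "$new_sorted"
}

# Example:
#   new_assets discovery_20240114/live_hosts.txt discovery_20240115/live_hosts.txt > new_assets.txt
```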

Integration with Security Tools

Asset discovery becomes most valuable when integrated with existing security operations. The goal is to ensure that newly discovered assets immediately enter security workflows for assessment, monitoring, and protection.

SIEM Integration

Feed discovered assets into security monitoring:

{

"timestamp": "2024-01-15T10:30:00Z",

"event_type": "asset_discovered",

"asset_url": "https://new-app.example.com",

"discovery_method": "certificate_transparency",

"risk_score": 7,

"requires_assessment": true

}

SIEM integration ensures that security teams receive immediate notification of newly discovered assets. The structured data format allows for automated processing and correlation with other security events. Risk scoring helps prioritize response efforts and ensures that high-risk discoveries receive immediate attention.
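
An event in the format shown above can be generated from the shell. This sketch uses the same field names; asset_event is a hypothetical helper, it performs no JSON escaping (so inputs must not contain quotes or backslashes), and forwarding through syslog is just one assumed ingestion path.

```shell
# Print one asset_discovered event as a single JSON line.
# $1 = asset URL, $2 = discovery method, $3 = risk score, $4 = optional timestamp
asset_event() {
    ts=${4:-$(date -u +%Y-%m-%dT%H:%M:%SZ)}
    printf '{"timestamp":"%s","event_type":"asset_discovered","asset_url":"%s","discovery_method":"%s","risk_score":%d,"requires_assessment":true}\n' \
        "$ts" "$1" "$2" "$3"
}

# Example (assumes the SIEM ingests local syslog):
#   asset_event https://new-app.example.com certificate_transparency 7 | logger -t asm-discovery
```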

Vulnerability Scanner Integration

Automatically scan newly discovered assets:

# Feed discoveries to vulnerability scanners

# (nessus_scan.py is assumed to be a local wrapper script around your scanner's API)

while IFS= read -r asset; do

    nessus_scan.py --target "$asset" --policy "ASM Discovery"

done < new_assets.txt

Vulnerability scanner integration creates a continuous security assessment capability. Newly discovered assets automatically enter vulnerability assessment workflows, ensuring that security teams understand the risk posture of all organizational assets. This integration is particularly important for identifying assets that might have been deployed without proper security controls.

Discovery Best Practices

Effective asset discovery requires balancing comprehensive coverage with operational constraints. The most successful programs establish clear processes, maintain appropriate documentation, and regularly evaluate their effectiveness.

Operational Guidelines

Scope Management

Clear scope definition prevents discovery activities from impacting unauthorized systems while ensuring comprehensive coverage of organizational assets.

- Define clear boundaries for discovery activities

- Respect third-party terms of service

- Avoid aggressive scanning of partner systems

- Document all discovery activities

Scope management becomes particularly important when dealing with cloud infrastructure, third-party services, and partner integrations. Organizations must balance comprehensive discovery with respect for external systems and legal constraints.

Rate Limiting

Responsible discovery practices prevent overwhelming target systems while maintaining effective intelligence gathering.

- Implement reasonable delays between requests

- Use distributed scanning to avoid overwhelming targets

- Monitor for blocking or rate limiting responses

- Adjust scan intensity based on target responsiveness

Rate limiting demonstrates professional responsibility and helps maintain the effectiveness of discovery operations. Aggressive scanning often triggers defensive measures that reduce the overall effectiveness of reconnaissance efforts.
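
One simple way to implement the delay and back-off guidance above is to double the pause whenever the target signals throttling. The sketch below is illustrative: the function names, the 60-second cap, and the choice of 429 and 503 as throttling signals are assumptions.

```shell
# Double a delay in seconds ($1), capped at a maximum ($2).
next_delay() {
    doubled=$(( $1 * 2 ))
    if [ "$doubled" -gt "$2" ]; then
        echo "$2"
    else
        echo "$doubled"
    fi
}

# Read URLs on stdin and request them one at a time, pausing between requests
# and backing off when the server answers 429 or 503.
polite_fetch() {
    delay=1
    while IFS= read -r url; do
        code=$(curl -s -o /dev/null -w '%{http_code}' "$url")
        case $code in
            429|503) delay=$(next_delay "$delay" 60) ;;
            *)       delay=1 ;;
        esac
        printf '%s\t%s\n' "$code" "$url"
        sleep "$delay"
    done
}

# Example:
#   polite_fetch < urls.txt
```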

Data Management: Proper data management ensures that discovery intelligence remains useful and actionable while protecting sensitive information.

- Maintain historical discovery records

- Track asset lifecycle (discovered, verified, deprecated)

- Implement data retention policies

- Secure sensitive discovery data

Data management becomes increasingly important as discovery programs mature and generate large volumes of intelligence. Historical data provides context for understanding changes and trends, while proper security controls protect sensitive reconnaissance information.
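Lifecycle tracking and historical records can be combined in a single record type that logs every state change. The sketch below uses the three states named above; the allowed transitions (including a deprecated asset reappearing as discovered) are an assumption made for illustration, and `AssetRecord` is a hypothetical name.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class State(Enum):
    DISCOVERED = "discovered"
    VERIFIED = "verified"
    DEPRECATED = "deprecated"


# Allowed transitions; an assumption for this sketch, adjust to your workflow.
ALLOWED = {
    State.DISCOVERED: {State.VERIFIED, State.DEPRECATED},
    State.VERIFIED: {State.DEPRECATED},
    State.DEPRECATED: {State.DISCOVERED},  # asset reappears in a later scan
}


@dataclass
class AssetRecord:
    name: str
    state: State = State.DISCOVERED
    history: list = field(default_factory=list)  # (timestamp, state) pairs

    def __post_init__(self):
        # Record the initial state so the history is complete from creation.
        self.history.append((datetime.now(timezone.utc), self.state))

    def transition(self, new_state: State) -> None:
        """Move to new_state, rejecting transitions the workflow does not allow."""
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"{self.state.value} -> {new_state.value} not allowed")
        self.state = new_state
        self.history.append((datetime.now(timezone.utc), new_state))
```

Because the history is append-only, it doubles as the audit trail that retention policies can later trim.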

Quality Assurance

Discovery quality directly impacts the effectiveness of subsequent security operations. False positives waste resources, while false negatives create security gaps.

Validation Processes: Systematic validation ensures that discovery results are accurate and actionable.

- Verify discovered assets belong to your organization

- Confirm asset ownership through multiple sources

- Validate discovery tool accuracy

- Cross-reference findings across multiple tools

Validation processes become particularly important when dealing with cloud services, CDNs, and third-party integrations that might generate false positives. Multi-source validation helps ensure that discovery results are both accurate and actionable.
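Cross-referencing can be as simple as counting how many independent sources reported each asset. The sketch below assumes each tool's output has already been reduced to a set of hostnames; the source names in the usage example and the two-source threshold are illustrative assumptions, not recommendations from a specific tool.

```python
from collections import defaultdict


def cross_reference(findings: dict[str, set[str]], min_sources: int = 2):
    """Split assets into confirmed and needs-review by number of reporting sources.

    findings maps a source name to the set of assets that source reported.
    """
    seen_by = defaultdict(set)
    for source, assets in findings.items():
        for asset in assets:
            # Hostnames are case-insensitive, so normalize before comparing.
            seen_by[asset.lower()].add(source)
    confirmed = {a: sorted(s) for a, s in seen_by.items() if len(s) >= min_sources}
    needs_review = {a: sorted(s) for a, s in seen_by.items() if len(s) < min_sources}
    return confirmed, needs_review
```

For example, `cross_reference({"ct_logs": {"api.example.com"}, "dns_bruteforce": {"API.example.com"}})` places `api.example.com` in the confirmed group, while an asset seen by only one source would be queued for manual ownership checks.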

False Positive Management: Effective false positive management improves the signal-to-noise ratio of discovery operations.

- Maintain whitelists of known false positives

- Filter CDN endpoints and shared infrastructure

- Exclude third-party services you don’t control

- Review filtered results regularly

False positive management requires ongoing attention and regular review. Cloud services and CDNs frequently change their IP ranges and service configurations, requiring updates to filtering rules and validation processes.
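A filtering step along these lines might look like the sketch below. The network range is a documentation prefix standing in for real CDN ranges, which change over time and should be loaded from each provider's published list; the known-false-positive hostname is likewise hypothetical. Filtered entries keep a reason so they can be reviewed later rather than silently dropped.

```python
import ipaddress

# Placeholder range (documentation prefix). Real CDN ranges change over time
# and should be loaded from the provider's published lists.
SHARED_INFRA_NETWORKS = [ipaddress.ip_network("198.51.100.0/24")]
KNOWN_FALSE_POSITIVES = {"status.example.com"}  # hypothetical third-party hosted page


def filter_findings(findings):
    """Split (hostname, ip) pairs into kept and filtered lists.

    Filtered entries carry a reason string to support periodic review.
    """
    kept, filtered = [], []
    for host, ip in findings:
        addr = ipaddress.ip_address(ip)
        if host.lower() in KNOWN_FALSE_POSITIVES:
            filtered.append((host, ip, "known false positive"))
        elif any(addr in net for net in SHARED_INFRA_NETWORKS):
            filtered.append((host, ip, "shared infrastructure"))
        else:
            kept.append((host, ip))
    return kept, filtered
```

Returning the filtered list alongside the kept list makes the regular review step straightforward: the reasons show which rule removed each entry.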

Common Discovery Challenges

Understanding common challenges helps organizations prepare for and overcome obstacles that might limit discovery effectiveness.

Technical Challenges

Dynamic Infrastructure: Modern infrastructure changes rapidly, creating challenges for traditional discovery approaches.

- Cloud resources with changing IP addresses

- Ephemeral containers and serverless functions

- Auto-scaling applications

- Temporary development environments

Dynamic infrastructure requires discovery approaches that can adapt to rapid changes and short-lived resources. Traditional discovery techniques that focus on static infrastructure may miss significant portions of modern application architecture.

Anti-Reconnaissance Measures: Organizations increasingly implement defensive measures that can limit discovery effectiveness.

- Rate limiting and request blocking

- Geolocation restrictions

- User-agent filtering

- Honeypots and deception technology

Anti-reconnaissance measures require adaptive discovery approaches that can work within defensive constraints while maintaining comprehensive coverage. The key is recognizing when results are being throttled, blocked, or distorted by deception systems, so that gaps in the data are not mistaken for an absence of assets.

Organizational Challenges

Asset Ownership: Complex organizational structures create challenges for understanding asset ownership and responsibility.

- Unclear responsibility for discovered assets

- Shadow IT and unauthorized deployments

- Acquired companies with separate infrastructure

- Partner and vendor-managed services

Asset ownership challenges require clear processes for validating and assigning responsibility for discovered assets. This becomes particularly important when discovery reveals assets that don’t match organizational expectations.

Resource Constraints: Limited resources can impact the comprehensiveness and effectiveness of discovery operations.

- Limited time for comprehensive discovery

- Lack of specialized discovery tools

- Insufficient skilled personnel

- Budget constraints for commercial solutions

Resource constraints require prioritization and focus on the most critical assets and highest-risk exposures. The key is balancing comprehensive coverage with available resources and organizational priorities.

Measuring Discovery Effectiveness

Effective asset discovery programs require ongoing measurement and optimization. Without proper metrics, organizations cannot understand whether their discovery efforts are comprehensive, efficient, or aligned with security objectives.

Key Metrics

Coverage Metrics

- Total assets discovered vs. known assets

- New asset discovery rate

- Discovery method effectiveness

- Time to discovery for new deployments

Coverage metrics help organizations understand the comprehensiveness of their discovery efforts. The gap between discovered and known assets indicates potential blind spots, while discovery rate trends reveal whether programs are keeping pace with infrastructure changes.
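Two of these metrics reduce to simple set and date arithmetic. The sketch below assumes the discovered assets and the internal inventory are available as sets of hostnames, and that deployment and discovery timestamps are recorded for new assets; the function and key names are hypothetical.

```python
from datetime import datetime
from statistics import median


def coverage_metrics(discovered: set[str], known: set[str]) -> dict:
    """Compare externally discovered assets with the internal inventory."""
    unknown = discovered - known   # found externally, missing from inventory
    missed = known - discovered    # in inventory, not found by discovery
    coverage = len(known & discovered) / len(known) if known else 0.0
    return {
        "inventory_coverage": coverage,
        "unknown_assets": sorted(unknown),
        "missed_assets": sorted(missed),
    }


def median_hours_to_discovery(pairs: list[tuple[datetime, datetime]]) -> float:
    """Median gap in hours between deployment and discovery.

    pairs holds (deployed_at, discovered_at) timestamps; must be non-empty.
    """
    return median((found - deployed).total_seconds() / 3600 for deployed, found in pairs)
```

Both difference sets matter: `unknown_assets` points at shadow IT or stale inventory, while `missed_assets` points at blind spots in the discovery methods themselves.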

Quality Metrics

- False positive rate

- Asset verification accuracy

- Discovery tool reliability

- Coverage completeness

Quality metrics ensure that discovery efforts produce actionable intelligence. High false positive rates waste resources and reduce confidence in discovery results, while poor verification accuracy can lead to security gaps or unnecessary remediation efforts.
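Tracking false positives per tool gives a rough view of both the false positive rate and tool reliability. In the sketch below, the rate is computed as the share of a tool's manually reviewed findings that were judged invalid, which is one common working definition rather than the only one; the input format and tool names are assumptions.

```python
from collections import Counter


def false_positive_rates(reviewed) -> dict:
    """Per-tool share of reviewed findings that were judged invalid.

    reviewed is an iterable of (tool, asset, is_valid) tuples produced by
    manual verification.
    """
    totals, false_pos = Counter(), Counter()
    for tool, _asset, is_valid in reviewed:
        totals[tool] += 1
        if not is_valid:
            false_pos[tool] += 1
    return {tool: false_pos[tool] / totals[tool] for tool in totals}
```

A tool whose rate climbs over successive reviews is a candidate for parameter tuning or tighter filtering.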

Continuous Improvement

Regular Assessment

- Monthly review of discovery effectiveness

- Quarterly tool performance evaluation

- Annual methodology updates

- Continuous threat landscape monitoring

Regular assessment ensures that discovery programs remain effective as infrastructure and threat landscapes evolve. Monthly reviews help identify immediate issues, while annual methodology updates ensure alignment with emerging threats and technologies.

Tool Optimization

- Refine discovery parameters based on results

- Integrate new discovery techniques

- Optimize automation workflows

- Enhance integration capabilities

Tool optimization improves the efficiency and effectiveness of discovery operations, with the aim of reducing manual effort and improving coverage.

Next Steps

Asset discovery provides the foundation for effective Attack Surface Management, but raw discovery data requires processing and analysis. The next article in this series will cover asset classification and risk assessment, showing how to prioritize discovered assets and focus security efforts on the highest-risk exposures.

Coming Next: Attack Surface Management: Asset Classification and Risk Assessment

Key Takeaways

- Comprehensive Approach: Combine passive and active techniques for complete asset discovery

- Continuous Process: Regular discovery identifies new assets and changes to existing ones

- Tool Diversification: Use multiple tools and techniques to maximize coverage

- Automation Essential: Manual discovery doesn’t scale for modern infrastructure

- Validation Critical: Verify discovered assets and filter false positives

Continue with Part 3: Asset Classification and Risk Assessment to learn how to prioritize discovered assets and focus security efforts effectively.